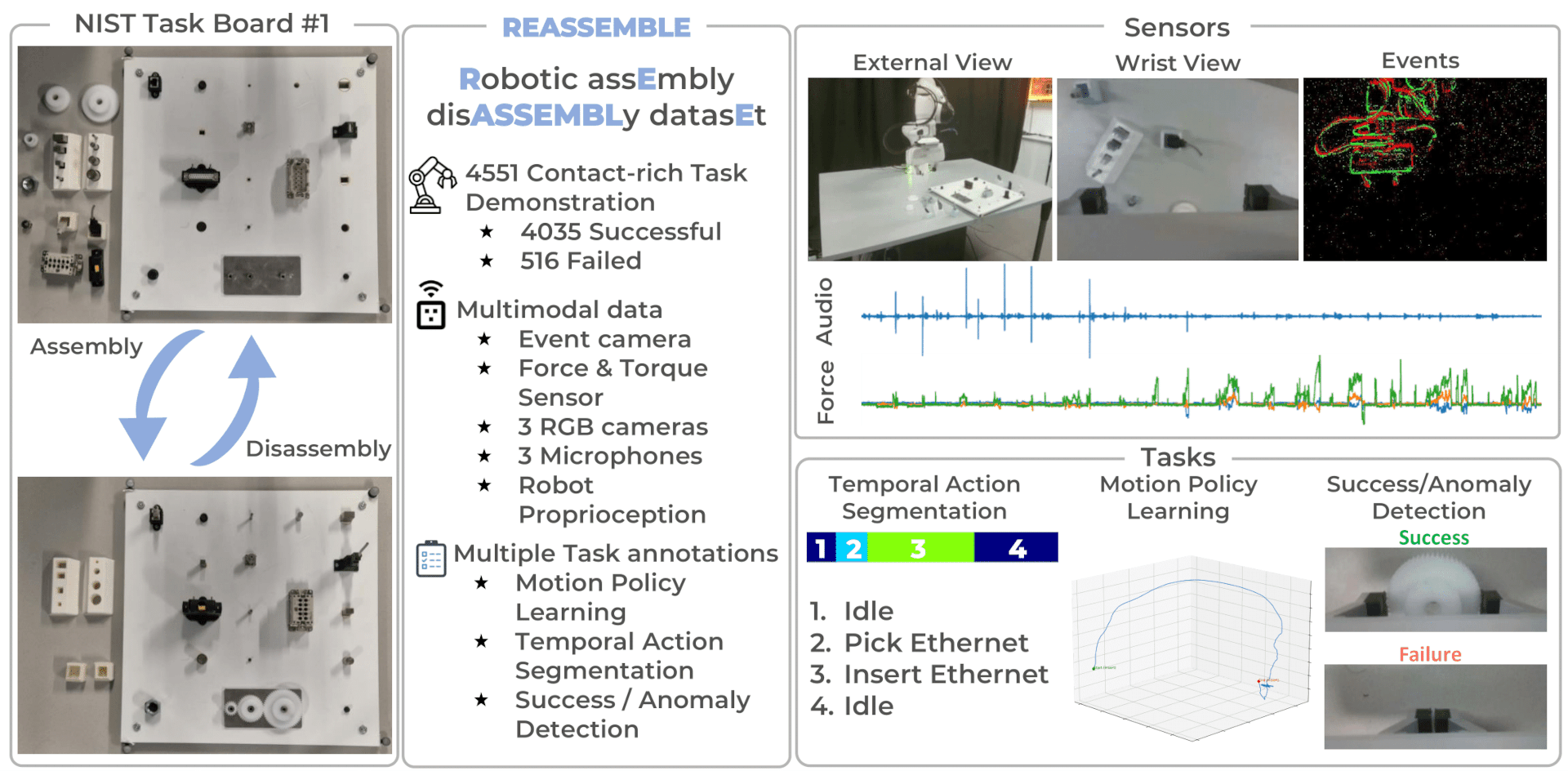

- Feb 2025: We are releasing the REASSEMBLE dataset.

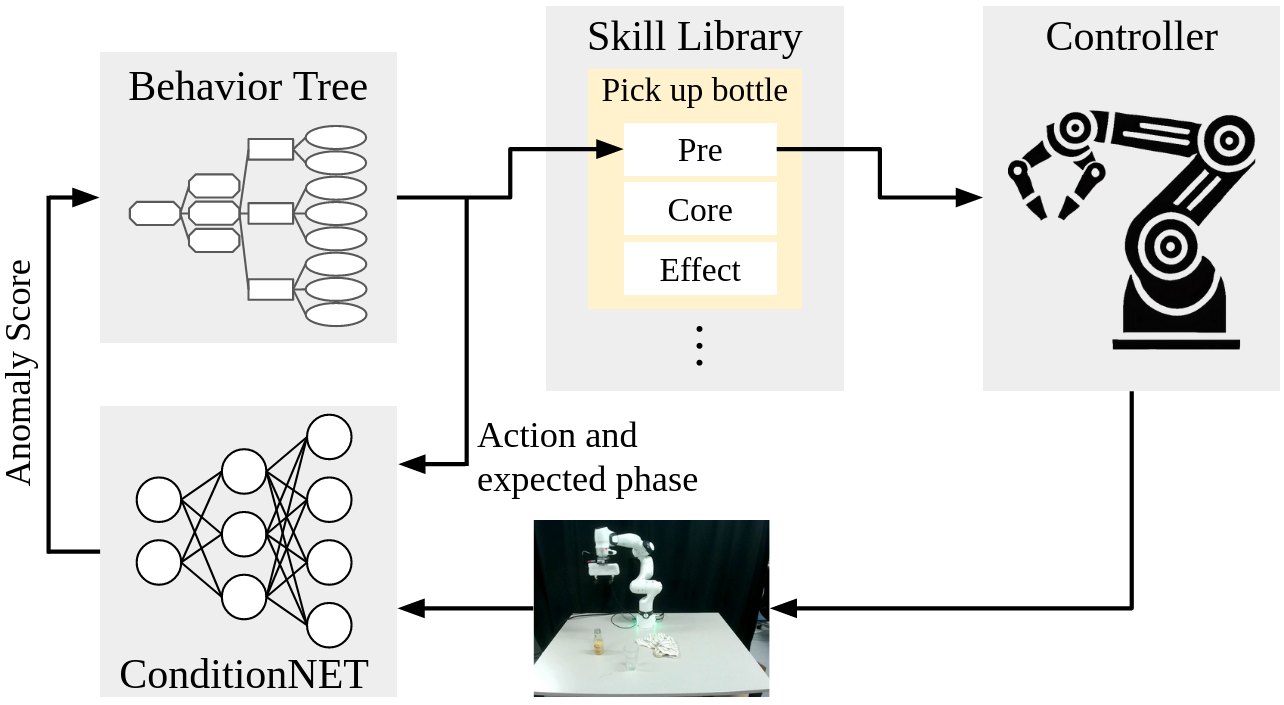

- Feb 2025: Our ConditionNET paper has been accepted to Robotics and Automation Letters!

- Jan 2025: I-CTRL has been accepted to RAM Journal!

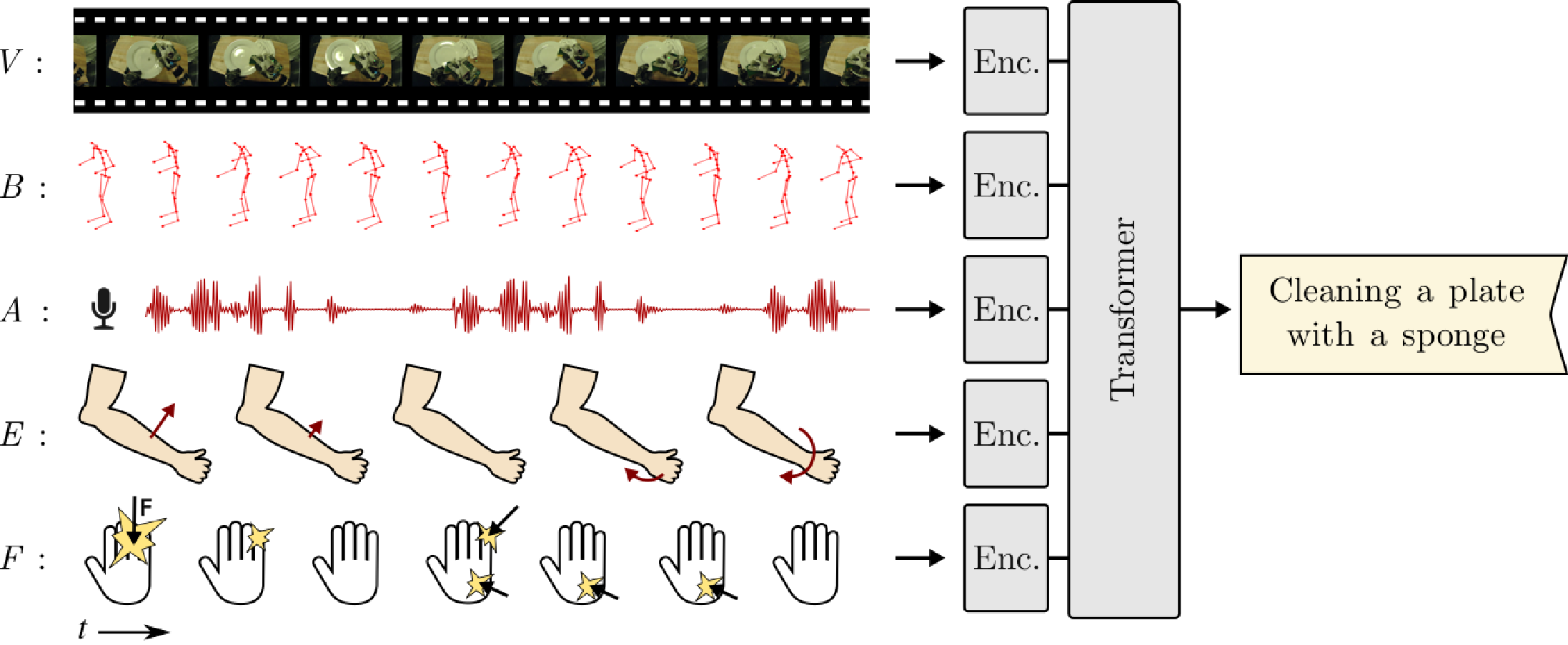

- Dec 2024: Our “Multimodal Transformer Models for Human Action Classification” paper won the Best Intelligence Paper award at RiTA.

- Sep 2024: Our “Multimodal Transformer Models for Human Action Classification” paper was accepted at RiTA.

- Sep 2024: Self-AWare has been accepted to Humanoids 2024 and HFR 2024.

- Jun 2024: I-CTRL is out! Take a look at how to control any bipedal humanoid robot by imitating any human motion.

- Dec 2023: SALADS has been accepted to ICRA 2024.

- Dec 2023: ECHO has been accepted to ICRA 2024.

- Dec 2023: UNIMASK-M has been accepted to AAAI 2024.

- Sep 2023: ImitationNet has been accepted to Humanoids 2023.

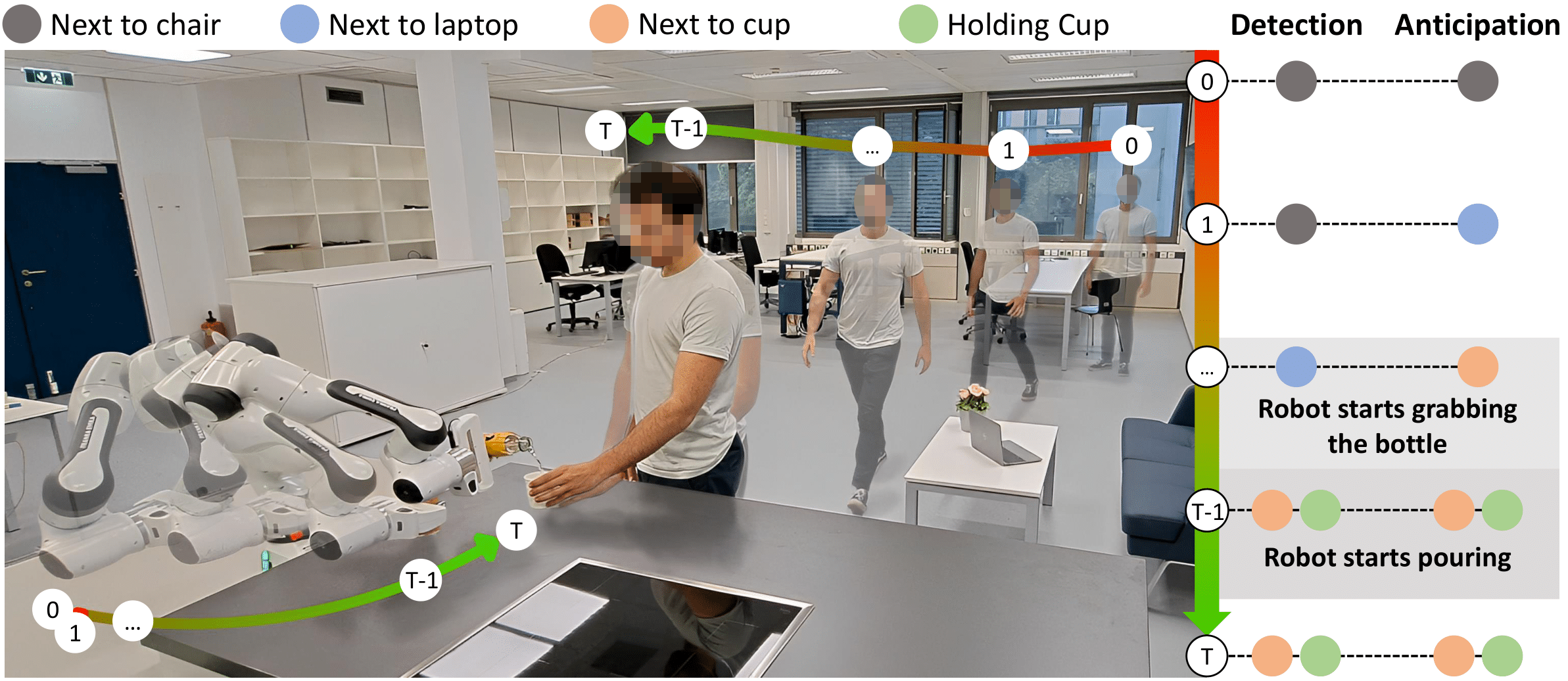

- Sep 2023: HOI4ABOT has been accepted to CoRL 2023.

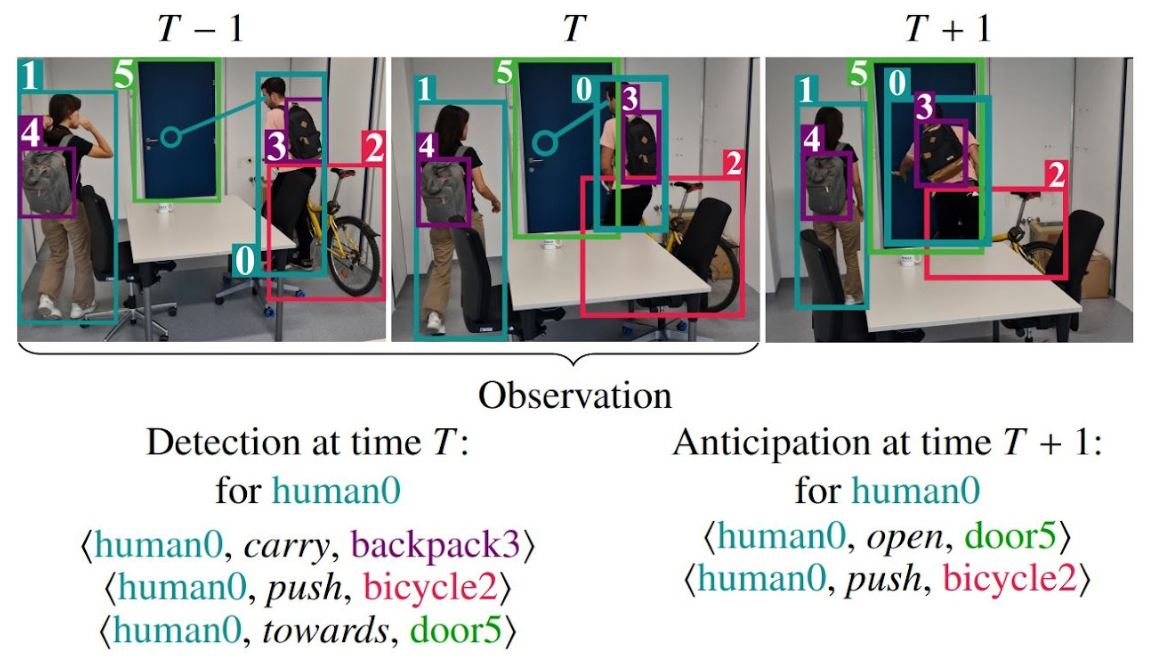

- Jun 2023: HOI-Gaze has been accepted to CVIU Journal 2023.

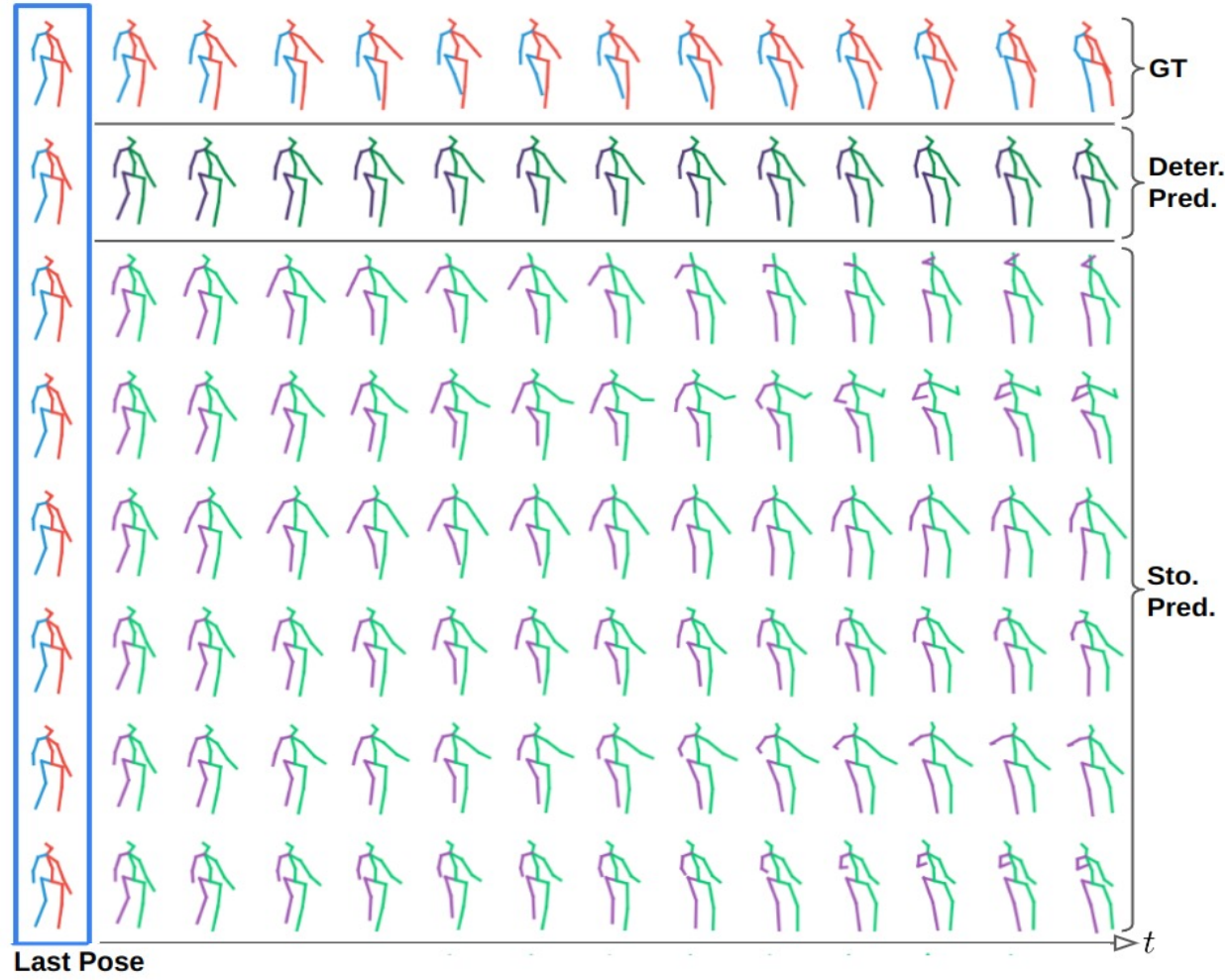

- Jan 2023: DiffusionMotion has been accepted to ICRA 2023.

- Nov 2022: I-CVAE has been accepted to WACV 2023.

- Oct 2022: We won the ECCV@2022 Ego4D Long-Term Action Anticipation Challenge: First Place Award with I-CVAE.

- Jun 2022: We won the CVPR@2022 Ego4D Long-Term Action Anticipation Challenge: First Place Award with I-CVAE.

- Apr 2022: 2CHTR has been accepted to IROS 2022.

Autonomous Systems Lab

At the Autonomous Systems Lab, we pursue innovative research on cognitive robot motor skill learning and control based on human motion understanding. Our research has two main focuses: autonomous learning from observations in daily life, and cognitive robot control. To realize an intuitive robot that meets human expectations for a robotic companion, we study human beings and transfer the mechanisms we discover to robotic systems. In this way, the robot can learn new skills without being programmed by engineers and can learn complicated tasks incrementally in a generalized framework. In particular, by bridging learning from observations, robot motor control, and learning from self-practice, robots will be capable of performing complex tasks robustly under uncertainty.